Risks and Rewards of AI Hiring Tools

In an effort to streamline the hiring process and make it easier for company stakeholders with conflicting schedules to participate, some companies have begun using Automated Video Interviews (AVIs): pre-recorded interviews that are evaluated by artificial intelligence (AI). It’s estimated that 86% of employers use AVIs as part of their hiring process. And according to HireVue, one of the leading providers of AVI services, over 20 million interviews were conducted on its platform in 2021. But to get the most out of AVIs, employers need to accurately assess the risks, rewards and possible legal ramifications of using this technology.

How AVIs Work

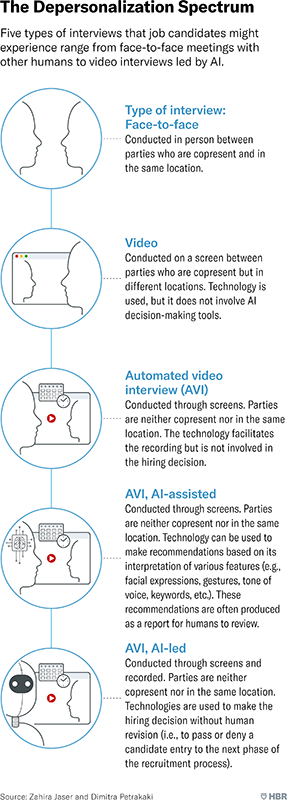

At its core, an automated video interview (AVI) is a pre-recorded interview, but it is also a technology that can process and assess interview data without human input. The software collects and processes biometric data from the candidate’s interview, such as facial expressions, language, and tone of voice, and AI algorithms then evaluate the recording to determine the candidate’s suitability for the position. Candidates usually aren’t allowed to review their video before it’s sent for machine processing. Here is a video that gives a decent explanation of how AVIs work.

AVI Benefits

Many companies increasingly prefer automated video interviews (AVIs) for the following reasons:

AVIs can quickly assess a candidate’s suitability in early interview rounds without face-to-face meetings. If thousands of candidates apply for a single job opening, it makes more sense to use an AVI to quickly weed out those who don’t meet basic requirements.

AVIs remove scheduling as an obstacle. When multiple stakeholders are involved in hiring, it’s simpler for each of them to view a single recorded interview than to schedule everyone for a specific date, time, and place.

AVIs make comparing candidates easier, because hiring managers only need to refer to the AI assessment report or the video to see how different people answered the same questions.

AVIs make it easier to standardize the interview process, because the process is managed by a machine rather than by individual people. Questions and assessment parameters can be set and applied equally to all candidates, as long as the technology doesn’t have built-in biases, a topic we’ll cover in more detail later.

Despite their efficiency, AVIs carry risks of inaccuracy, data misappropriation, and bias.

AVI Legal Risks

Artificial intelligence is developing rapidly, and applications that use it have proliferated across almost every industry. But the legal framework in which these new technologies operate has not fully adapted, although that is beginning to change. One of the biggest risks businesses take on when adopting AI-based tools is that they may become entangled in legal trouble as new laws are passed to govern how the technology can be used. It’s important to understand that AVIs use AI to gather and assess biometric data, and that this data might also be used by data miners for other purposes, some of which employers may never anticipate.

In 2022, a class-action lawsuit was brought against a hiring platform alleging that it collected biometric information for analysis in violation of Illinois’ Biometric Information Privacy Act (BIPA).

“BIPA requires private entities, including employers, that ‘collect, capture, purchase, receive . . . or otherwise obtain’ biometric identifiers or information, such as face geometry, in Illinois, to satisfy three requirements. First, the entity must inform the person in writing that a biometric identifier or biometric information is being collected or stored. Second, the entity must inform the subject in writing of the specific purpose and length of term for which a biometric identifier or biometric information is being collected, stored, and used. And third, the entity must receive a written release from the subject.

BIPA also requires entities, including employers in Illinois, that process biometric identifiers or information to develop and publicize a written policy establishing a retention schedule and guidelines for permanently destroying the biometric identifiers or information. BIPA prohibits entities, including employers in Illinois, from selling or otherwise profiting from a person’s or customer’s biometric identifiers or biometric information.”

Texas, Illinois and Washington State all have broad biometric privacy laws on the books, while Portland, Oregon, and New York City have more limited biometric privacy rules. California, Colorado, Connecticut, Utah, and Virginia have all passed comprehensive consumer privacy laws that, once in full effect, will expressly govern the processing of biometric information. Because AVI tools collect biometric data, every business should seek legal guidance before using them.

EEOC Warning

The U.S. Equal Employment Opportunity Commission (EEOC) has warned employers that using “algorithmic decision-making” technology as part of their hiring process may violate the Americans with Disabilities Act (ADA).

“The most common ways that an employer’s use of algorithmic decision-making tools could violate the ADA are:

The employer does not provide a “reasonable accommodation” that is necessary for a job applicant or employee to be rated fairly and accurately by the algorithm.

The employer relies on an algorithmic decision-making tool that intentionally or unintentionally “screens out” an individual with a disability, even though that individual is able to do the job with a reasonable accommodation. “Screen out” occurs when a disability prevents a job applicant or employee from meeting—or lowers their performance on—a selection criterion, and the applicant or employee loses a job opportunity as a result. A disability could have this effect by, for example, reducing the accuracy of the assessment, creating special circumstances that have not been taken into account, or preventing the individual from participating in the assessment altogether.

The employer adopts an algorithmic decision-making tool for use with its job applicants or employees that violates the ADA’s restrictions on disability-related inquiries and medical examinations.”

The EEOC provides a questionnaire to help employers determine if their use of algorithmic decision-making tools is in violation of the ADA.

Cases Relevant to AVIs

In 2018, it was reported that Amazon had scrapped its automated hiring tool because it was biased against women. According to a Reuters article, the program downgraded female candidates who used “feminine” language or who attended all-women colleges, because the dataset on which it was trained reflected biases against characteristics stereotypically associated with women.

In 2020, a Harvard publication reported that facial recognition algorithms had error rates of roughly 20% to 34% for darker-skinned women, far higher than their error rates for lighter-skinned faces.

And in 2021, HireVue removed facial analysis from its offerings because, in the company’s words, facial expressions “are not universal” and “can change due to culture, context and disabilities.” HireVue was also facing a class-action lawsuit.

AVI Best Practices

Automated Video Interviews (AVIs) are not going away, but the technology is in many ways still in its infancy. As a result, it may have undiscovered flaws that could harm a business’s reputation and put it in legal jeopardy. Employers should begin by asking the following questions:

Could this tool have an adverse impact on candidates who belong to a protected class (such as women, racial minorities, or people with disabilities)?

Is biometric information handled in accordance with federal, state, and local privacy laws?

How secure is the biometric data?

How long will biometric data remain on the software company’s servers?

Before incorporating AVIs into its hiring process, a business should work with an attorney to determine whether any aspect of the technology, or of its use of the technology, could violate the law.

Seattle Bankruptcy Attorneys

Do you have questions about bankruptcy and business law? Contact the experienced Seattle bankruptcy attorneys at Wenokur Riordan PLLC today at (206) 724-0846 to discuss your situation.